Why Elon Musk, Facebook and MIT Are Betting On Mind-Reading Technology

Mind-Reading Technology

So, if we really could have an interface with a computer, with artificial intelligence that could read our minds, you can imagine a case in which we could be immortal. But that would completely change humanity and what it means to be human.

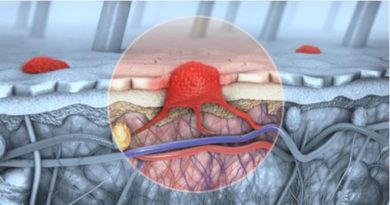

Merging the human brain with a computer would truly change our species forever. Researchers are developing mind-reading technology that can transfer data between computers and our brains, and even read people’s minds. For now, we have the power to detect brainwaves and track electrical pulses within the neurons in our brains, and researchers are using this information, however vague, to aid the differently-abled and make life easier for everyone.

Among these researchers is 16-year-old Alex Pinkerton. “Really, all that we’re working on is a brain-computer interface that utilizes graphene, and hopefully, if our math is correct, it’ll be sensitive enough to read the magnetic fields of human thought.”

The Department of Defense first started funding brain-computer interface research in the 1970s. The brain-computer interface market is expected to reach a value of $1.72 billion by 2022.

Big players like Elon Musk and Facebook have teased their entrance into the mind-reading technology market, while other companies are already showing their work in action. CTRL Labs, for example, created a wristband that measures the electrical pulses traveling from the brain to the neurons in a person’s arm, allowing the wearer to control a computer.

New and exciting research is pouring out of universities like MIT and the University of California in San Francisco. So, what is a brain-computer interface in the context of mind-reading technology? It’s a way for a computer to take information directly from the brain, without you having to type or speak it, and translate that information into some kind of action.

That’s what Pinkerton is working on: a connection between the brain and a device, like your phone or a prosthesis. He was first inspired by his dad, who works on clean energy. “My dad came in to talk to our class about graphene when I was in third grade, just to give a little presentation.”

“I’m not sure why, but that sort of sparked my interest, and I had just been thinking, like, why isn’t this being used everywhere if it’s the perfect material? So I started thinking of applications, and at first it was mainly for the military or something.”

“Now, the focus has sort of moved away from that to, well, the brain-computer interface and maybe VR, super immersive VR. Obviously, it could help the disabled.”

Pinkerton is the co-founder and CEO of Brane Interface. For the past few years, he has spent his holiday breaks and the occasional weekend in his dad’s lab working on his graphene brain-computer interface.

“I was just at my dad’s office after school, and we used a program called Mathcad just to type in a bunch of numbers to fit the characteristics of graphene for a brain interface. At first it wasn’t working, so we just kept tweaking and tweaking and tweaking until it was finally able to get to the low magnetic fields of human thought.”

Graphene is an almost impossibly thin layer of carbon, only a single atom thick.

“We’ve finished two prototypes that don’t use graphene; they’re just Mylar, which is basically Saran Wrap. The first prototype can reach 10⁻³ tesla, the second 10⁻⁶, so really nowhere near. But this new graphene prototype, which is about halfway done, should be able to reach 10⁻¹⁵ if our math is correct, and that’s the level of human thought we need to detect.”
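To put the gap in perspective, the ratios between the quoted figures can be worked out directly. This is simple arithmetic on the numbers Pinkerton gives. It is not a measured result, and it takes the 10⁻¹⁵ tesla target (one femtotesla) at face value:

$$
\frac{10^{-3}\,\text{T}}{10^{-15}\,\text{T}} = 10^{12},
\qquad
\frac{10^{-6}\,\text{T}}{10^{-15}\,\text{T}} = 10^{9}
$$

In other words, the second Mylar prototype would need to become roughly a billion times more sensitive to reach the stated target. The first would need to improve roughly a trillion times.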

The goal is an interface small enough to fit in an earbud or inside a hat that lets users control physical devices with their thoughts, for example playing music on their phones or moving a prosthesis. “People know that it could be used for all these amazing things, but they really haven’t found the killer application, and I think that’s what we’ve done.” Pinkerton also wants to keep costs down so the technology can be available to anyone.

“Right now, a lot of brain interface technology is super cumbersome, inefficient or expensive, so we’re hoping to get all three of those out of the way. Basically, it can fit in an earbud, it can read the magnetic fields of human thought with no problem, and it’s relatively inexpensive. You can imagine how good that could be for disabled people. They could move robotic arms just by wearing the earbuds and thinking about it, instead of having their skull opened up and electrodes put on their brain.”

Elon Musk’s version of this technology might be one of those skull-opening options. Neuralink, a company co-founded by Musk, is working to add a digital “third layer above the cortex that would work well and symbiotically with you.” “The purpose of Neuralink is to create a high-bandwidth interface to the brain such that we can be symbiotic with AI.” The Neuralink website has been little more than a list of job openings for a while, and an update has been teased as “coming soon” for months. But this technology would supposedly require invasive surgery.

“What it seems they’ve done is taken rats and implanted a kind of grid of electrodes, using a technique they call a sewing machine, which seems to put these electrodes in really, really quickly, because you have to be really specific when you place these electrodes. But ultimately, it seems to be a way of linking brain activity in these rats to some kind of computer, and possibly to each other.”

And the envelope keeps getting pushed further.

A recent breakthrough at the University of California in San Francisco showed how researchers can read the brain’s signals to the larynx, jaw, lips and tongue, and translate them through a computer to synthesize speech. And in 2018, MIT revealed its AlterEgo device, which measures neuromuscular signals in the jaw to allow humans to converse in natural language with machines simply by articulating words internally.

But how can you recognize specific brainwaves? How can you pick out the “play music” command from the constant noise of thoughts and brainwaves?

“When someone says they have a tool measuring brainwaves, the first thing I want to ask is, ‘How do you know they’re actually brainwaves, as opposed to just some electrical changes that happen from the head and neck?’ For example, from the cranial nerves, the nerves that innervate the head and neck and help you blink or feel your face, because the signals from deep inside the brain are harder to get at.”

In the examples from the University of California in San Francisco and MIT, both studies focused on computers working out a person’s intentions by matching brain signals to the physical movements they would usually produce in a person’s vocal tract or jaw. They’re using signals that would usually trigger muscles to simulate what the body would do. The deep, internal thoughts and processes within our brain are still quite elusive.

“Let’s say that we’re trying to create a device that allows us to play music from our Sonos or some speaker system in our house, right? How I would imagine telling the Sonos to do that could be pretty different from how someone else would. But you could train me to do it.”

That is exactly Pinkerton’s intent. “You can kind of teach, almost teach, the brain to do specific things over and over again.” Still, this is not entirely new. We’ve been hooking brains up to machines to read electrical activity since the 1920s. And brain-computer interfaces? These are tools people have been working on since the 1970s, and there are still a lot of hurdles to making them commercially available.

“For a lot of these tools, you actually need to keep very still, and that doesn’t have a lot of real-world applications. I think the limitation a lot of engineers are really facing is how to keep distinguishing signal from noise when the person is moving.”

“That’s a major problem, but I feel like in the last 10 years there’s been a lot more interest from commercial companies trying to create a product that allows this to happen. Virtual reality, for example, is a pretty clear case in which a BCI might be helpful.”

Brane Interface said it has been approached by several technology and investment companies but plans to finish its prototype later this year before pursuing those opportunities.